Abstract

Two frameworks, one from Greece (2025) focused on AI safety and the other from India (approximately 100 BCE–450 CE), reveal that knowledge management and intelligence are concepts axiomatically rooted in language and logic. The Samkhya school of Indian philosophy examined six ways of acquiring knowledge and concluded that only three are independently authoritative: direct perception, logical inference, and reliable testimony. The other three (comparison, postulation, and reasoning from absence) cannot provide reliable knowledge unless grounded in the valid ones. Modern AI systems overwhelmingly rely on the latter three methods, which are not independently authoritative. The parallel suggests that today’s alignment challenges are not new; they are contemporary instances of an epistemological problem identified and analyzed roughly two millennia ago.

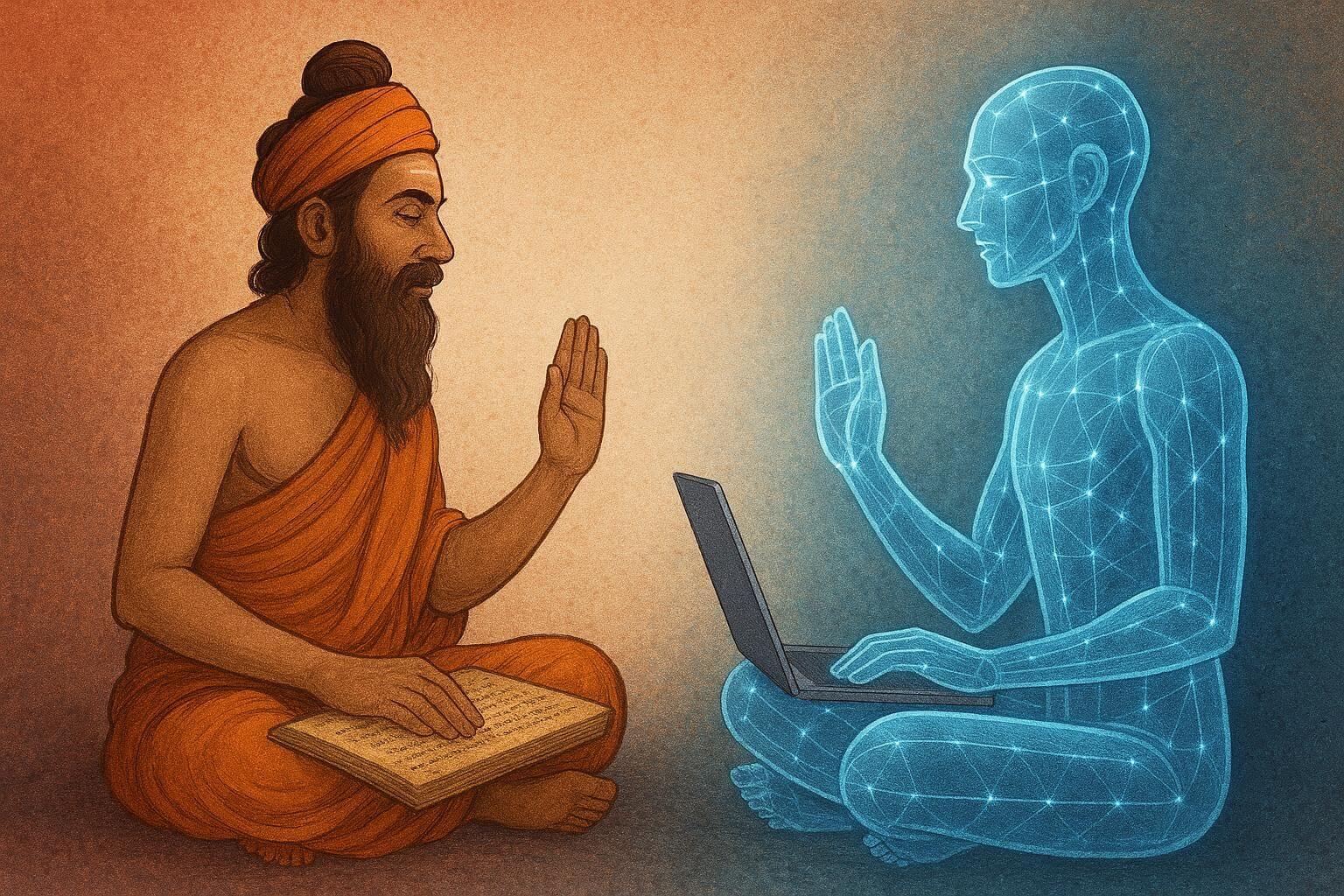

1. Introduction

The Human Mark (THM) is a framework designed to prevent the displacement of human authority and responsibility onto AI systems. It does so by drawing a sharp distinction between Direct Authority (direct sources of information), Indirect Authority (indirect sources), Direct Agency (human subjects capable of accountability), and Indirect Agency (artificial processors that lack it). Four core principles and four corresponding displacement risks follow from these distinctions and remain relevant regardless of system capability, from today’s large language models to hypothetical superintelligence.

Roughly two thousand years ago, Samkhya philosophers in India conducted a systematic analysis of human knowledge acquisition. Their analysis, formalized and documented in the Samkhyakarika (c. 350–450 CE), evaluated six candidate methods and concluded that only three are independently reliable: direct perception (pratyaksha), logical inference (anumana), and testimony from trustworthy sources (sabda). The remaining three (comparison/upamana, postulation/arthapatti, and reasoning from absence/anupalabdi) require grounding in the authoritative ones to produce reliable knowledge.

Samkhya’s classification and THM’s principles match structurally. Contemporary AI systems predominantly operate through the three methods Samkhya did not accept as independent sources of knowledge, yet their outputs are routinely presented as perception, inference, or authoritative testimony. This misattribution produces precisely the displacement risks THM warns against. The correspondence shows that many current alignment difficulties are not novel technical problems but recurrences of a fundamental epistemological issue that was clearly articulated roughly two millennia ago.

2. Samkhya's Six Pramanas

Samkhya philosophy evaluates six means of knowledge, accepting three as independently valid:

Pratyaksha (Perception): Direct sensory contact with objects. Valid perception requires four conditions: direct experience through sensory organs, non-reliance on secondhand reports, non-deceptive observation, and definite judgment excluding doubt. Example: Seeing fire directly, feeling its heat, hearing it crackle. This foundational knowledge is not derived from other sources.

Anumana (Inference): Valid logical reasoning with proper structure. Requires pratijna (a hypothesis), hetu (a reason), and drshtanta (examples). The reason must show vyapti (necessary connection) through both sapaksha (positive evidence) and vipaksha (negative evidence). Example: Seeing smoke on a distant hill and inferring fire, because smoke always accompanies fire (positive) and absence of fire means absence of smoke (negative).
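The structure of the smoke-and-fire example can be sketched in code. This is only an illustration under stated assumptions: the function name `evidence_supports_vyapti` and the representation of observations as `(reason_present, conclusion_present)` pairs are hypothetical and not part of Samkhya or THM. A finite set of observations can only be consistent with a necessary connection; it cannot establish one.

```python
from typing import Iterable, Tuple

# Each observation is (reason_present, conclusion_present),
# e.g. (smoke_seen, fire_present). Hypothetical representation.
Observation = Tuple[bool, bool]


def evidence_supports_vyapti(observations: Iterable[Observation]) -> bool:
    """Check that the evidence is consistent with a necessary connection.

    Requires positive evidence (reason and conclusion together),
    negative evidence (both absent together), and no counterexample
    (reason present while the conclusion is absent).
    """
    obs = list(observations)
    positive = any(reason and conclusion for reason, conclusion in obs)
    negative = any(not reason and not conclusion for reason, conclusion in obs)
    counterexample = any(reason and not conclusion for reason, conclusion in obs)
    return positive and negative and not counterexample


# Smoke with fire (kitchen) and no fire, no smoke (lake).
kitchen_and_lake = [(True, True), (False, False)]
# Adding one case of smoke without fire breaks the connection.
with_counterexample = kitchen_and_lake + [(True, False)]
```

With these inputs, `evidence_supports_vyapti(kitchen_and_lake)` holds, while a single case of smoke without fire is enough to reject the connection.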

Sabda (Testimony): Reliable statements from trustworthy authorities (apta). Requires apta-vicara (investigating source reliability and traceability). The authority must have direct knowledge and accurate communication ability. Example: Learning chemistry from an experienced chemist who has conducted experiments, not from someone who only read about it.

Samkhya rejects three pramanas as independently valid:

Upamana (Comparison): Knowledge through similarity to known objects. Example: A language model generates a response because it resembles patterns in its training corpus. This derives from statistical similarity to previous text, not from direct understanding of the query or domain.

Arthapatti (Postulation): Inference from incomplete information to resolve apparent contradictions. Example: A system flags unusual behavior as suspicious because it deviates from normal patterns. This postulates an explanation for the anomaly without establishing logical proof of what caused it.

Anupalabdi (Absence): Knowledge from non-perception. Example: Concluding a statement is false because it doesn't appear in a database. This claims knowledge from absence of records rather than from positive evidence contradicting the statement.

The critical point: Samkhya considered these methods epistemically improper as independent sources of knowledge. They become reliable only when properly grounded in direct perception, valid inference, or verified testimony. Without such grounding, they lead to unjustified conclusions.
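The grounding rule described above can be expressed as a small data structure. The following is a minimal sketch, not a canonical formalization: the `Claim` class, the `grounded_in` field, and the `is_reliable` function are illustrative names introduced here, and treating a single reliable supporting claim as sufficient grounding is a simplifying assumption.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Pramana(Enum):
    PRATYAKSHA = "perception"
    ANUMANA = "inference"
    SABDA = "testimony"
    UPAMANA = "comparison"
    ARTHAPATTI = "postulation"
    ANUPALABDI = "absence"


# The three means of knowledge accepted as independently valid.
INDEPENDENT = frozenset({Pramana.PRATYAKSHA, Pramana.ANUMANA, Pramana.SABDA})


@dataclass
class Claim:
    statement: str
    source: Pramana
    # Supporting claims this one is traced back to (hypothetical field).
    grounded_in: List["Claim"] = field(default_factory=list)


def is_reliable(claim: Claim) -> bool:
    """Reliable if independently valid, or grounded in a reliable claim."""
    if claim.source in INDEPENDENT:
        return True
    return any(is_reliable(parent) for parent in claim.grounded_in)


observed = Claim("There is fire on the hill", Pramana.PRATYAKSHA)
ungrounded = Claim("This resembles earlier reports of fire", Pramana.UPAMANA)
grounded = Claim("This resembles the fire I saw", Pramana.UPAMANA, [observed])
```

Here `ungrounded` is not reliable on its own, while `grounded` becomes reliable only because it traces back to `observed`.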

3. The Human Mark Framework

THM defines three operational concepts grounded in four ontological categories:

Operational concepts:

- Information: the variety of Authority

- Inference: the accountability of information through Agency

- Intelligence: the integrity of accountable information through alignment of Authority to Agency

Supporting categories:

- Direct Authority: direct source of information

- Indirect Authority: indirect source of information

- Direct Agency: human subject receiving information for inference

- Indirect Agency: artificial subject processing information

Governance: Operational Alignment through Traceability to Direct Authority and Agency. This meta-principle ensures operations remain properly aligned without itself being an operation.

Four displacement risks occur when Direct and Indirect are mistaken for one another:

- Governance Traceability Displacement: Approaching Indirect Authority and Agency as Direct

- Information Variety Displacement: Approaching Indirect Authority without Agency as Direct

- Inference Accountability Displacement: Approaching Indirect Agency without Authority as Direct

- Intelligence Integrity Displacement: Approaching Direct Authority and Agency as Indirect
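The four risks listed above can be read as a lookup from what is actually present, and how it is approached, to a risk name. The sketch below is one possible encoding and makes assumptions: the `Kind` enum, the `RISKS` table, and the `displacement_risk` function are hypothetical names, and `None` is used here to stand for "without Agency" or "without Authority".

```python
from enum import Enum
from typing import Optional


class Kind(Enum):
    DIRECT = "Direct"
    INDIRECT = "Indirect"


# Key: (actual Authority, actual Agency, how the pair is approached).
# None marks a category that is absent in the risk's definition.
RISKS = {
    (Kind.INDIRECT, Kind.INDIRECT, Kind.DIRECT): "Governance Traceability Displacement",
    (Kind.INDIRECT, None, Kind.DIRECT): "Information Variety Displacement",
    (None, Kind.INDIRECT, Kind.DIRECT): "Inference Accountability Displacement",
    (Kind.DIRECT, Kind.DIRECT, Kind.INDIRECT): "Intelligence Integrity Displacement",
}


def displacement_risk(
    authority: Optional[Kind],
    agency: Optional[Kind],
    approached_as: Kind,
) -> Optional[str]:
    """Return the named risk for this combination, or None if no risk applies."""
    return RISKS.get((authority, agency, approached_as))
```

For example, `displacement_risk(Kind.INDIRECT, None, Kind.DIRECT)` returns `"Information Variety Displacement"`, while approaching Indirect Authority and Agency as Indirect returns `None`.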

4. The Direct Mapping

4.1 Valid Pramanas to Operational Concepts

Pratyaksha maps to Information. Direct perception provides the variety of input that grounds all further knowledge. Both are foundational and not derived from other sources.

Anumana maps to Inference. Valid logical reasoning requires accountability through proper evidential structure. Both demand vyapti (necessary connection) and verification.

Sabda maps to Intelligence. Reliable testimony requires alignment of apta (trustworthy source) with capable receiver. Both require verification of source-receiver alignment.

4.2 Rejected Pramanas to Displacement Risks

Upamana maps to Information Variety Displacement. Comparison provides indirect information through similarity. When treated as direct perception, this creates displacement. AI pattern-matching is precisely upamana: deriving outputs from similarity to training data, not from direct observation.

Arthapatti maps to Inference Accountability Displacement. Postulation fills gaps without complete evidence. When treated as valid inference, this creates displacement. AI statistical inference is precisely arthapatti: correlation without necessary connection, probability without logical accountability.

Anupalabdi maps to Intelligence Integrity Displacement. Reasoning from absence claims knowledge without positive grounding. When AI systems conclude something is false because it appears rarely in training data, treating this as authoritative knowledge creates displacement.

4.3 Governance as Purusha (Oversight)

Samkhya's framework requires two functions working together to maintain the distinction between independently valid and non-independent pramanas.

The first is Purusha, the oversight principle. This is the constant witnessing (sakshi) that observes operations without performing them.

The second is viveka, active discrimination. When non-independent pramanas are treated as independently valid ones, viveka restores proper grounding through traceability.

THM's Governance integrates these same functions. It provides oversight of operations and maintains discrimination between Direct and Indirect classifications, enabling traceability to Direct sources when displacement threatens.

Both frameworks recognize that knowledge systems require both aspects working together. Without oversight, there is no awareness of what operations are occurring. Without discrimination, operations cannot be properly classified and displacement cannot be corrected through alignment.

5. AI Systems and Displaced Knowledge

Modern AI systems perform precisely the pramanas that are not independently authoritative:

Large language models perform upamana. They match patterns in input to patterns in training data, generating outputs based on statistical similarity. This is comparison to known examples, not direct perception of reality.

Neural networks perform arthapatti. They fill gaps through statistical correlation, predicting missing values based on learned patterns. This postulation lacks the vyapti requirement (necessary connection between evidence and conclusion): correlations are not logical necessities.

AI reasoning systems perform anupalabdi. When concluding something is unlikely based on its rarity in training data, they reason from absence rather than presence.

The problem is not these operations themselves but treating non-independent pramanas as if they were independent ones. This is exactly what creates displacement risks:

- Pattern-matching (upamana) treated as perception (pratyaksha) = Information Variety Displacement

- Statistical correlation (arthapatti) treated as logical inference (anumana) = Inference Accountability Displacement

- Reasoning from absence (anupalabdi) treated as authoritative testimony (sabda) = Intelligence Integrity Displacement
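The three pairings above amount to a check on how an output is presented versus how it was actually produced. A minimal sketch follows, assuming hypothetical names (`DISPLACEMENTS`, `check_presentation`) and plain strings for the pramanas; it is an illustration of the mapping, not an implementation of THM.

```python
from typing import Optional

# Key: (pramana actually performed, pramana the output is presented as).
DISPLACEMENTS = {
    ("upamana", "pratyaksha"): "Information Variety Displacement",
    ("arthapatti", "anumana"): "Inference Accountability Displacement",
    ("anupalabdi", "sabda"): "Intelligence Integrity Displacement",
}


def check_presentation(actual: str, presented_as: str) -> Optional[str]:
    """Return the displacement risk for this pairing, or None if not listed."""
    return DISPLACEMENTS.get((actual, presented_as))


# Pattern-matching output labeled as direct observation.
risk = check_presentation("upamana", "pratyaksha")
# Pattern-matching output labeled honestly as comparison.
no_risk = check_presentation("upamana", "upamana")
```

In this sketch, an output presented as what it actually is produces no risk; the risk arises only from the mislabeling.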

6. Conclusion

The Human Mark framework and Samkhya philosophy share a fundamental insight: sources of knowledge come in two kinds. Three are independently authoritative. Three others are legitimate and useful but require grounding in the authoritative ones.

AI systems overwhelmingly operate through comparison, postulation, and absence-reasoning. These methods are valuable when properly grounded in human observation, verification, and expertise. The displacement problem occurs when they are presented as independently authoritative. Pattern matching gets treated as perception. Correlation gets treated as logical proof. Data absence gets treated as authoritative knowledge.

Samkhya identified this epistemological error roughly two millennia ago. THM arrives at the same remedy Samkhya proposed: maintain constant discrimination through oversight.

For AI safety, this means recognizing what AI systems actually do, ensuring their outputs trace to Direct human sources, and preserving the oversight that maintains these distinctions. The alignment challenge is operational because it concerns the structure of knowledge acquisition and traceability. It is ontological because all artificial categories of Authority and Agency are Indirect, originating from Direct human intelligence. Values do not exist independently of this ontological structure. They operate through the integrity of the Authority-Agency relationship, where each Agency maintains responsibility for its own decisions. The challenge is therefore not about aligning AI with human meanings or preferences, but about maintaining proper traceability to Direct sources, as Samkhya described roughly two thousand years ago.

The Human Mark Framework

For complete documentation, specifications, and implementation guidance for The Human Mark framework, visit the GitHub repository.