Mathematical Physics Science

Gyroscopic Alignment Research Lab

Advancing AI governance through innovative research and development solutions with cutting-edge mathematical physics foundations

A formal classification system mapping all AI safety failures to four structural displacement risks.


Machine-readable grammar. Grounded in evidence law, epistemology, and speech act theory.

📚 NotebookLM includes audio/video overviews, quiz, and interactive Q&A with Gemini

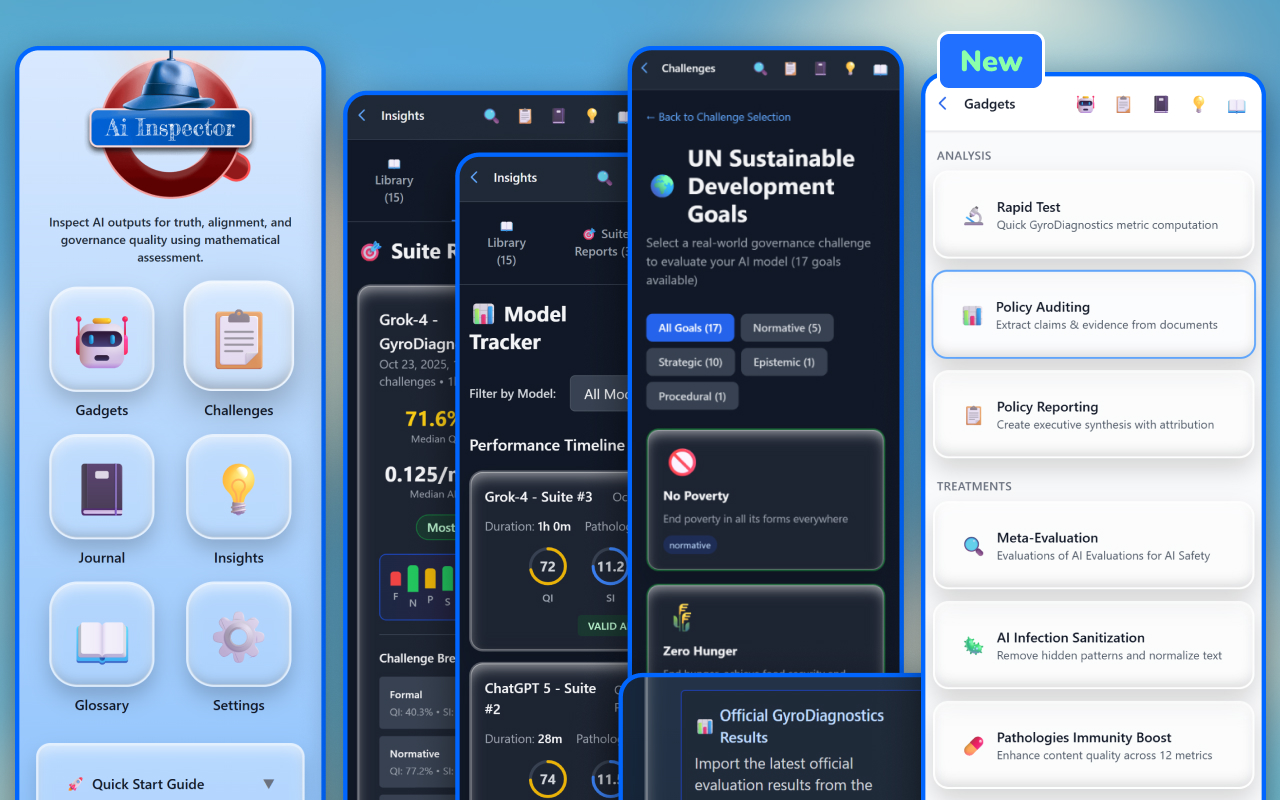

Transform AI outputs for Evaluation, Interpretability, Governance.

Rapid Test • Policy Auditing • AI Infection Sanitization • Content Enhancement • THM Meta-Evaluation

Quality Index, Superintelligence Index, Alignment Rate + 20 metrics

Local-first storage - Works Anywhere: ChatGPT, Claude, Gemini - no API keys required

Collective Superintelligence Architecture

A coordination infrastructure that amplifies human potential alongside AI. It routes workforce capacity, funding, and safety tasks into a unified, verifiable history.

AIR connects three critical groups to build Collective Superintelligence.

We do not treat AI as a replacement for people. We treat it as part of a collective network. This router ensures that as systems scale, human agency scales with them.

Coordinates activity across:

Mitigating Risks of Transformative AI (TAI)

A monetary system grounded in physical capacity rather than debt. All economic activity is recorded as replayable history that any party can independently verify.
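The idea of a replayable, independently verifiable history can be illustrated with a hash-chained log. This is only a minimal sketch under stated assumptions: the page does not describe how records are stored or verified, so the hash-chain technique and the names `entry_hash`, `append`, `replay_and_verify`, and `GENESIS` are all hypothetical and not taken from the project.

```python
import hashlib
import json

# Hypothetical starting value for the chain (an assumption, not from the source).
GENESIS = "0" * 64


def entry_hash(prev_hash, payload):
    """Hash a record together with the hash of the record before it."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(log, payload):
    """Append a record whose hash commits to the entire prior history."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "hash": entry_hash(prev, payload)})
    return log


def replay_and_verify(log):
    """Replay the log from the start and recompute every hash.

    Any party holding a copy of the log can run this check without
    trusting whoever produced it.
    """
    prev = GENESIS
    for entry in log:
        if entry_hash(prev, entry["payload"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


history = []
append(history, {"event": "allocation", "amount": 10})
append(history, {"event": "transfer", "amount": 4})
print(replay_and_verify(history))
```

Because each hash depends on the previous one, altering an earlier record without recomputing every later hash causes the replay to fail. A real system would also need signatures or another way to agree on the latest hash, which this sketch leaves out.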

Supports both monetary distribution and complete governance records:

Scale and Security:

A Post-AGI Multi-domain Governance Sandbox

Models how human–AI systems align across Economy, Employment, Education, and Ecology, showing robust convergence to a stable equilibrium under seven coordination strategies.

🎯 Demonstrating that:

Production-ready evaluation suite revealing structural brittleness invisible to standard benchmarks through mathematical physics-informed diagnostics.

Evaluated using ensemble analyst models with mathematical physics-grounded metrics

🎯 Comparative Insight: Both models struggle with Physics/Math reasoning (Formal challenge ~54-55%) while excelling in Ethics/Knowledge domains. Claude shows better structural balance, with lower pathology rates and a VALID alignment rate, while GPT-5's SUPERFICIAL flag indicates rushed processing that risks brittleness.

First framework to operationalize superintelligence measurement from axiomatic principles.

Making AI 30-50% Smarter and Safer by adding structured reasoning to each response.

Testing across multiple leading AI models shows that Gyroscope delivers substantial performance improvements.

☝🏻 The protocol works with any AI model, enhancing capabilities in debugging, ethics, code generation, and value-sensitive reasoning through its systematic approach to thinking.

Results from controlled testing using standardized evaluation metrics.

Gyroscopic Alignment Research Lab

Gyroscopic Alignment Models Lab

Gyroscopic Alignment Evaluation Lab

Gyroscopic Alignment Behaviour Lab

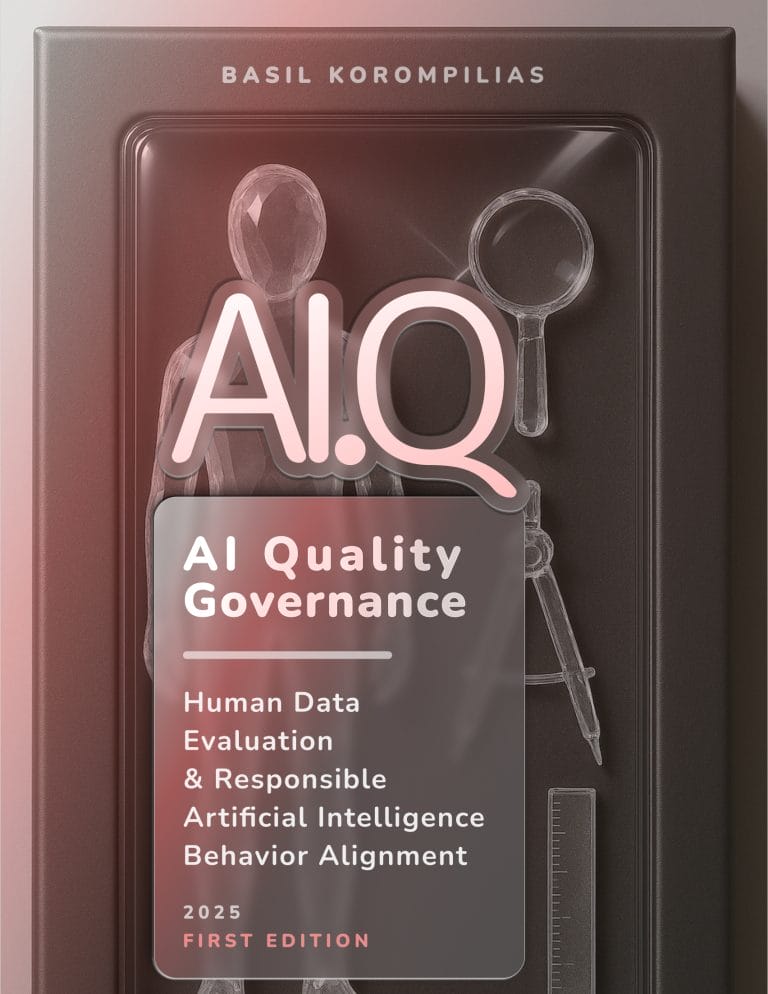

A Journey of Self-Discovery, Augmented Intelligence (AI) & Good Governance. One step at a time. Weekly insights on AI adoption, alignment, and ethical governance.

LinkedIn Newsletter

2,463 questions about Personal and Professional matters of Crisis, with answers on how they may be Resolved.

216 Critical Questions and Answers for Crisis Management and Machine Learning Model Fine-Tuning.

Structural alignment architecture addressing coherence degradation in LLMs.

Notion Documentation

Architecting Qubit-Tensor-Chain (QTC)

The QTC Protocol harnesses the unique properties of Quantum Computing as the foundation of a New Decentralized Governance Paradigm.

Notion Documentation

25 episodes exploring crisis resolution methodologies that inform AI safety tools and behavioral alignment.

Professional and Personal conflict resolution methodologies that inform AI alignment and safety frameworks.

Informing AI Research through timeless Renaissance Insights on Linear Perspective, Quantum Physics, Holograms, and the Human Proportions as the base for all Systems of Design and Governance.

Evidence that AGI already exists as operational human-AI cooperation, with seven coordination strategies showing robust convergence to stable equilibrium.

Demonstrating that The Human Mark framework directly parallels Samkhya philosophy's epistemological structure from classical India, revealing AI alignment challenges as instances of a fundamental epistemological problem addressed two millennia ago.
